Polyglot Data Modeling

polyglot | \ ˈpä-lē-ˌglät \ : speaking or writing several languages : multilingual

For decades, data modelers have worked with three levels: conceptual, logical, and physical. So what is a "polyglot" model, and why is it needed?

Traditional Data Modeling

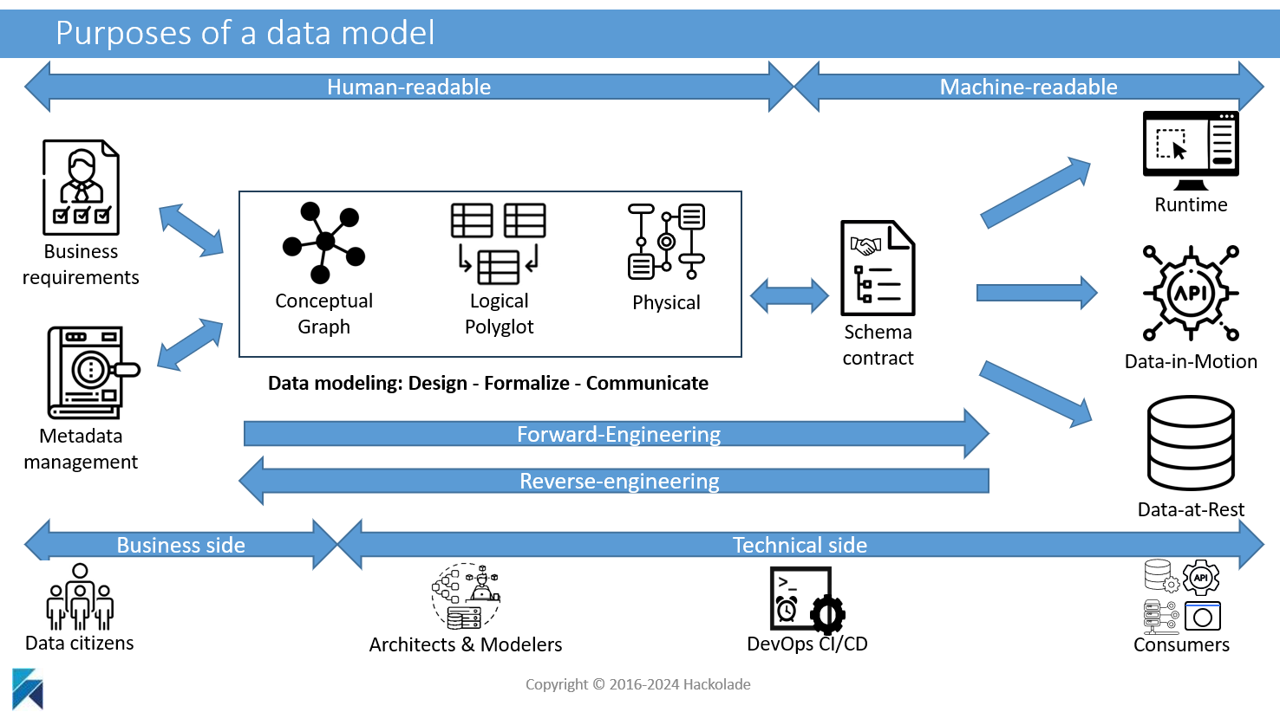

A data model is a formal representation of business requirements for the information systems of an organization. It is a design tool that produces a blueprint for software applications, and a communication tool to align stakeholders through a shared understanding of the meaning and context of data.

The modeling process makes it possible to discover and document how data from different parts of the organization fits together. Models are easy for humans to understand when presented as a diagram with text and symbols, typically an Entity-Relationship diagram or a graph diagram.

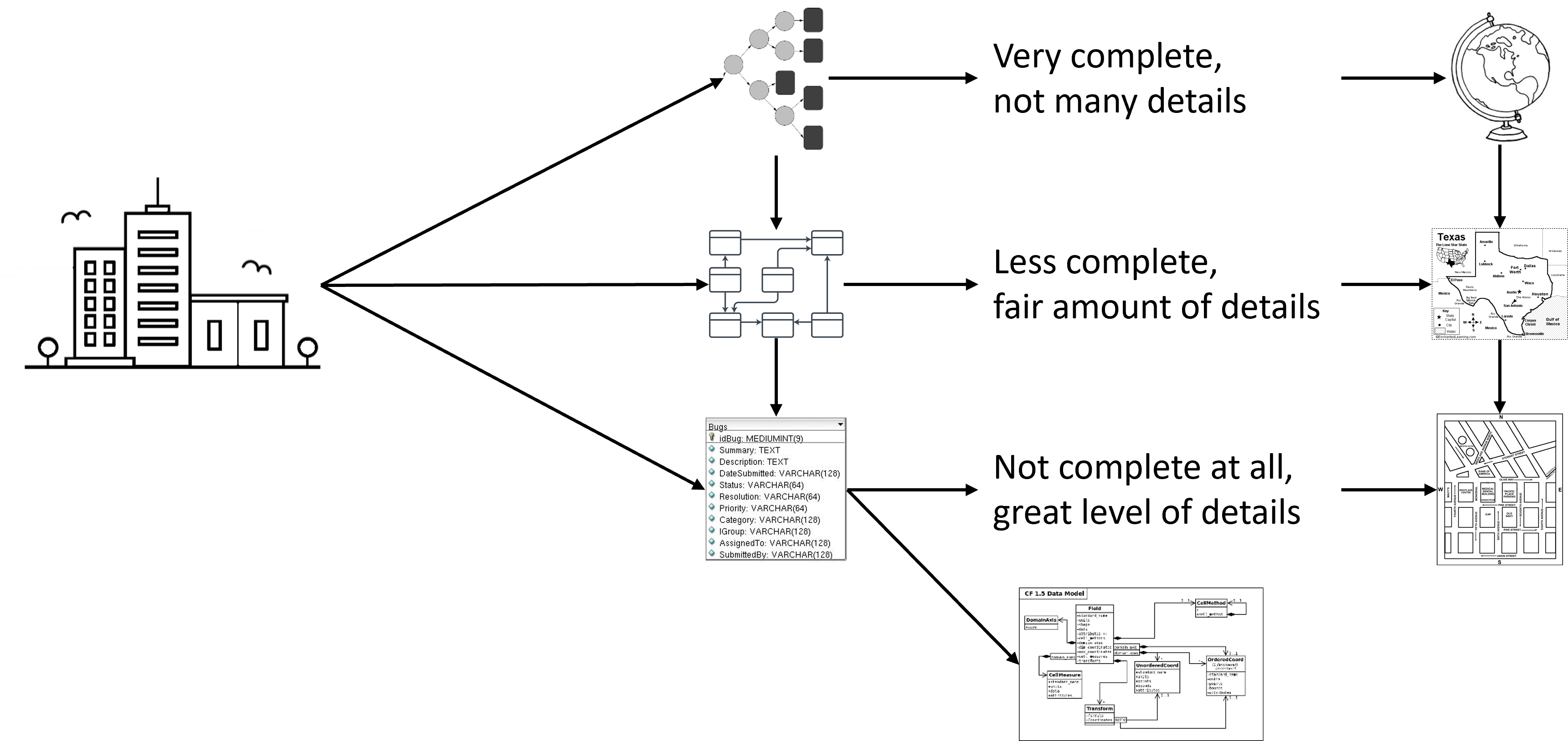

Three different levels of data modeling address different needs:

At a high level, often called "conceptual", the purpose is to align the stakeholders on the vocabulary and the scope of the effort, based on the requirements expressed by domain subject-matter experts. This process is formalized through the identification of the concepts and materialized with the definition of entities (with their attributes), and relationships (with their cardinality) between these entities.

At the next level, often called "logical", the purpose is to refine the model by capturing business rules and ensuring the integrity of the solution. This is materialized by constraints that enforce the business rules. Even with the eventual technology solution in mind, it is good to remain "technology-agnostic" at this stage, so that the solution could be implemented using different technologies.

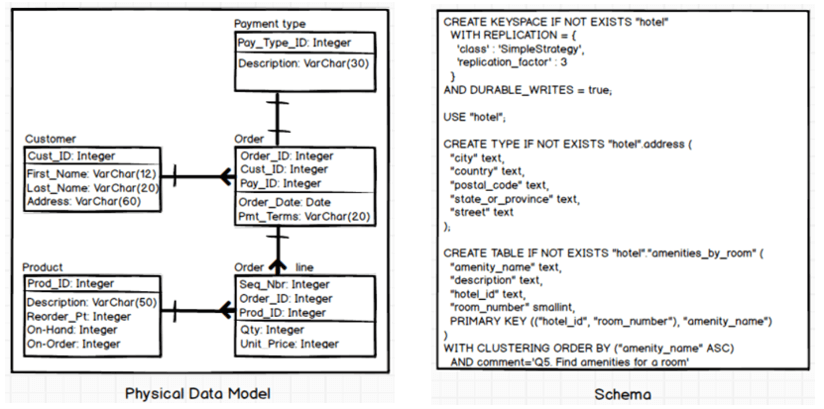

At the third level, often called "physical", the purpose is to design the technical implementation, including many of the details of the solution. From this physical data model, it becomes possible to generate schema artifacts such as DDL scripts, which are machine-readable contracts that create the structure of a database.
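
As a minimal sketch of that generation step, the Python below renders a `CREATE TABLE` statement from a small physical model. The dictionary layout, the `customer` table, and the `generate_ddl` helper are assumptions made for illustration; they do not reflect the internal format of any particular tool.

```python
# Hypothetical physical model: table, columns, types and keys are illustrative.
physical_model = {
    "table": "customer",
    "columns": [
        {"name": "customer_id", "type": "INTEGER", "nullable": False},
        {"name": "email", "type": "VARCHAR(255)", "nullable": False},
        {"name": "signup_date", "type": "DATE", "nullable": True},
    ],
    "primary_key": ["customer_id"],
}


def generate_ddl(model: dict) -> str:
    """Render a CREATE TABLE statement from the physical model dictionary."""
    lines = []
    for col in model["columns"]:
        null_clause = "" if col["nullable"] else " NOT NULL"
        lines.append(f"  {col['name']} {col['type']}{null_clause}")
    if model.get("primary_key"):
        lines.append(f"  PRIMARY KEY ({', '.join(model['primary_key'])})")
    body = ",\n".join(lines)
    return f"CREATE TABLE {model['table']} (\n{body}\n);"


ddl = generate_ddl(physical_model)
print(ddl)
```

The point of the sketch is the direction of travel: the physical model holds the target-specific detail (column types, nullability, keys), and the DDL is derived from it rather than written by hand.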

Let's consider a traditional description of the different levels of data modeling:

| Features | Conceptual | Logical | Physical |
|---|---|---|---|
| Entity names | X | X | |
| Attributes | X | X | |
| Entity relationships | X | X | |
| Relationship cardinality | X | X | |
| Primary keys | | X | X |
| Foreign keys | | X | X |
| Table names | | | X |
| Column names | | | X |
| Column data types | | | X |

The above process has been adequate for decades of working with relational databases.

Support for modern technologies

In the above levels, it is generally understood that a logical model must be "technology-agnostic" and should respect the rules of normalization.

It quickly becomes obvious that a logical model can be technology-agnostic only if the target is strictly RDBMS. As soon as you need the logical model to be useful with other technologies, the strict definition of a logical model becomes too constraining. Given that Hackolade Studio supports so many database and data exchange technologies that are not RDBMS, it was necessary to free ourselves from the strict constraints of the definition of logical models. To emphasize the differences, we found that using a different word, "polyglot", was necessary to avoid unnecessary semantic discussions.

Today's big data not only allows, but promotes denormalization and the use of complex data types, which are not compatible with the strict definition of logical modeling. Plus, physical schema designs are application-specific and query-driven, based on access patterns.
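
To make the contrast concrete, here is a small sketch with made-up data: the same order held first as normalized rows, then as a single denormalized document with a nested array, a complex type that a strictly normalized logical model would not allow. The `embed_order` helper and all field names are hypothetical.

```python
# Normalized form: two flat "tables" linked by order_id (illustrative data).
orders = [{"order_id": 1, "customer": "Ada"}]
order_lines = [
    {"order_id": 1, "sku": "A-100", "qty": 2},
    {"order_id": 1, "sku": "B-200", "qty": 1},
]


def embed_order(order: dict, lines: list[dict]) -> dict:
    """Build a denormalized document: the order with its lines nested inside."""
    nested = [
        {"sku": line["sku"], "qty": line["qty"]}
        for line in lines
        if line["order_id"] == order["order_id"]
    ]
    return {**order, "lines": nested}


order_doc = embed_order(orders[0], order_lines)
print(order_doc)
```

A query that reads an order together with its lines now touches a single document, which is the access-pattern argument for denormalizing.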

Going from Logical to Physical is fairly straightforward in a relational world, because the databases implement different dialects of the same SQL specification. This is not the case with NoSQL or analytical big data.

A Polyglot Data Model for your Polyglot Data

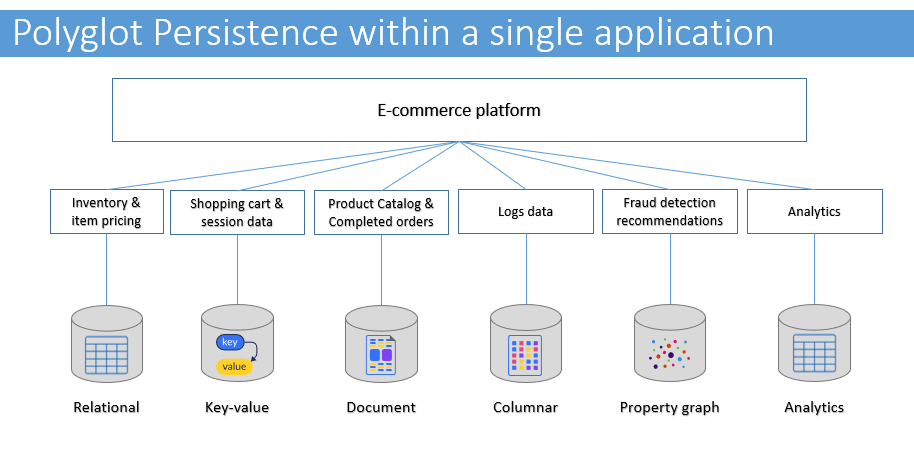

There is a need for a data model which allows complex data types and denormalization, yet can be easily translated into vastly different syntaxes on the physical side. We call it a "Polyglot Data Model", a term inspired by the brilliant Polyglot Persistence approach promoted by Pramod Sadalage and Martin Fowler in their 2013 book NoSQL Distilled: A Brief Guide to the Emerging World of Polyglot Persistence.

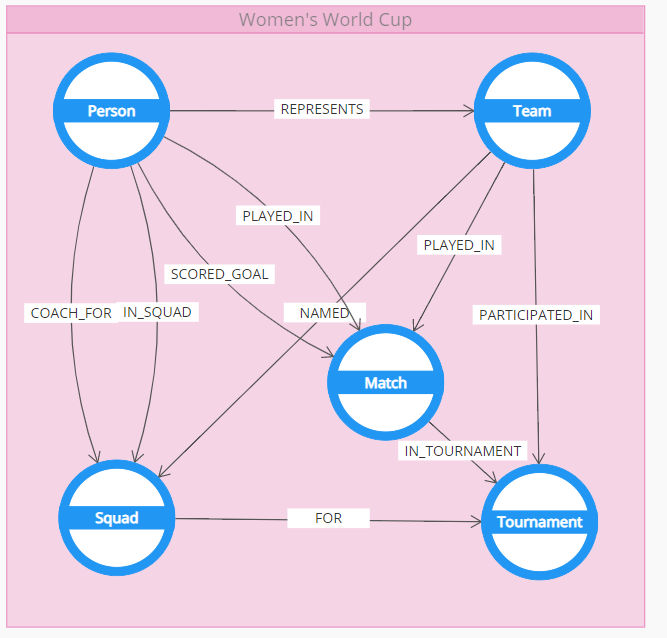

In our experience with customers, we observe two different types of polyglot persistence. The first type is the one originally described by Martin Fowler: best-of-breed persistence technology applied to the different use cases within a single application:

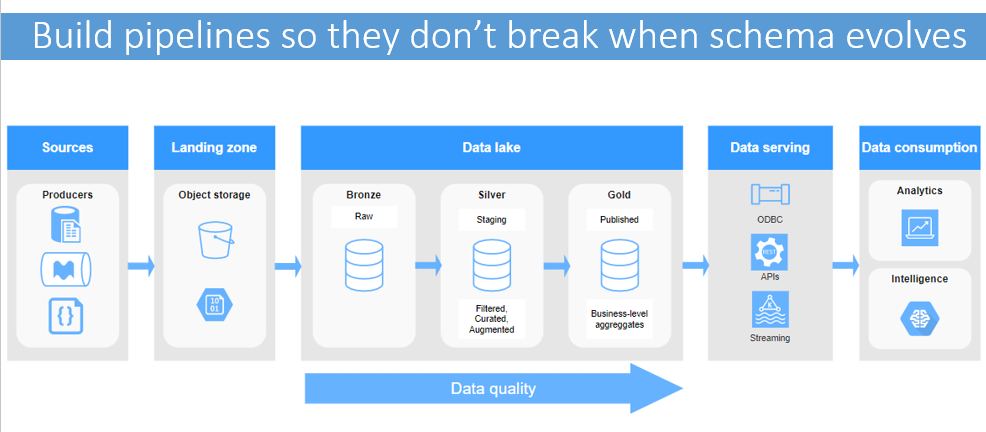

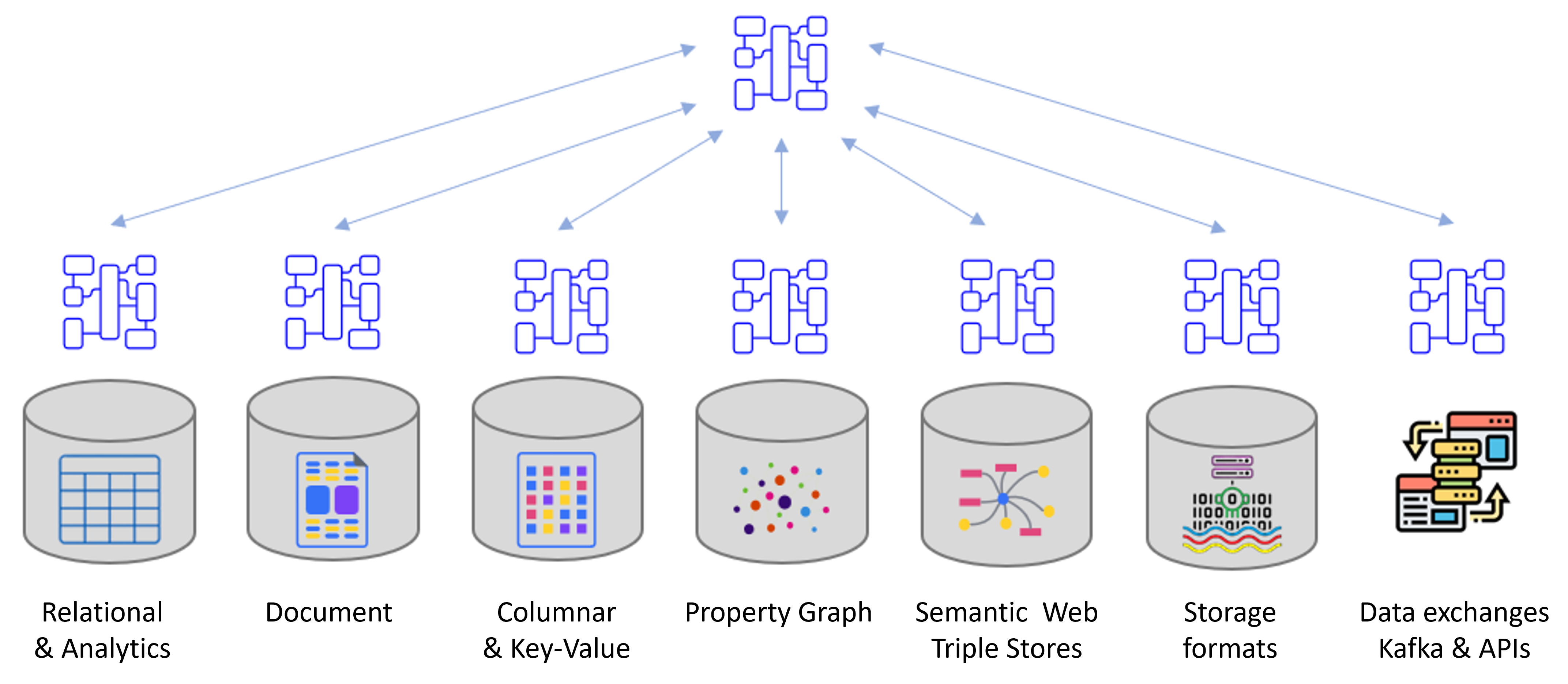

A second type of polyglot persistence is even more pervasive: data pipelines from operational data stores, through object storage and multi-stage data lakes, streamed or served via APIs to self-service analytics, data warehouses, ML, and AI.

In either case, customers expect a modern data modeling tool to help design and manage schemas across the entire data landscape.

In our definition, a Polyglot Data Model is sort of a logical model in the sense that it is technology-agnostic, but with additional features:

- it allows denormalization, if desired, given query-driven access patterns;

- it allows complex data types;

- it generates schemas for a variety of technologies, with automatic mapping to the specific data types of the respective target technologies;

- it combines in a single model both a conceptual layer, in the form of a graph diagram view that is friendly to business users, and an ER diagram for the logical layer.
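
The third point, one model feeding several targets through type mapping, can be sketched as follows. This is an illustration under stated assumptions, not a description of how Hackolade Studio works: the `polyglot_entity` layout, the two mapping tables, and both helper functions are hypothetical, and mapping an array to a SQL `JSON` column is just one possible choice that real targets may handle differently.

```python
import json

# Hypothetical technology-agnostic entity; generic type names are illustrative.
polyglot_entity = {
    "name": "customer",
    "fields": [
        {"name": "id", "type": "integer"},
        {"name": "email", "type": "string"},
        {"name": "tags", "type": "array", "items": "string"},
    ],
}

# Assumed mappings from generic types to target-specific types.
SQL_TYPES = {"integer": "INTEGER", "string": "TEXT", "array": "JSON"}
JSON_SCHEMA_TYPES = {"integer": "integer", "string": "string", "array": "array"}


def to_sql_ddl(entity: dict) -> str:
    """Map each generic field type to a SQL column type."""
    cols = [f"  {f['name']} {SQL_TYPES[f['type']]}" for f in entity["fields"]]
    return f"CREATE TABLE {entity['name']} (\n" + ",\n".join(cols) + "\n);"


def to_json_schema(entity: dict) -> dict:
    """Map the same fields to a JSON Schema object definition."""
    props = {}
    for f in entity["fields"]:
        prop = {"type": JSON_SCHEMA_TYPES[f["type"]]}
        if f["type"] == "array":
            # Arrays keep their element type as a nested "items" schema.
            prop["items"] = {"type": JSON_SCHEMA_TYPES[f["items"]]}
        props[f["name"]] = prop
    return {"title": entity["name"], "type": "object", "properties": props}


sql_ddl = to_sql_ddl(polyglot_entity)
json_schema = to_json_schema(polyglot_entity)
print(sql_ddl)
print(json.dumps(json_schema, indent=2))
```

The entity is defined once; only the mapping tables know about each target, so adding another technology means adding another mapping rather than another model.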

Our Polyglot Data Model also supports conceptual data modeling. We do this by leveraging the principles of Domain-Driven Design, including aggregates to store together what belongs together, and a graph view that represents concepts in a graph diagram, which business users often find friendlier and less intimidating than Entity-Relationship diagrams.

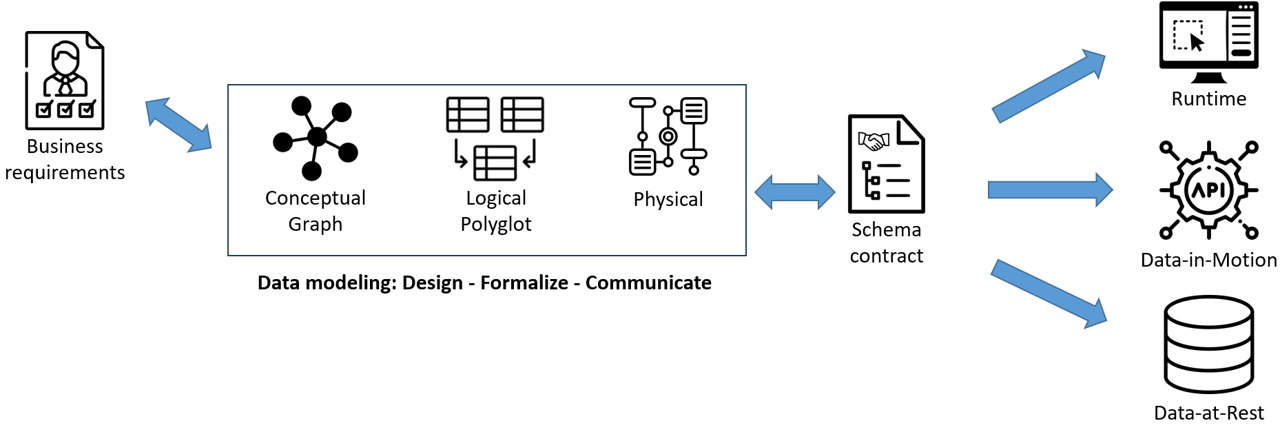

Data Model vs Schema Design

A data model is an abstraction describing and documenting the information systems of organizations. Data models provide value in design, formalism, understanding, communication, collaboration, governance, and more. They help document the context and meaning of data.

But the value of data models at a technical level is in the artifacts they help create: schemas. A schema is a “consumable” collection of objects describing the layout or structure of a file, a transaction, or a database. A schema is a scope contract between producers and consumers of data, and an authoritative source of structure and meaning of the context.
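
One way to picture a schema acting as a contract is a consumer checking incoming records against it. The sketch below uses a deliberately simplified, hypothetical schema format (field name mapped to an expected Python type and a required flag) and a made-up `violations` helper; a real pipeline would rely on the validation facilities of its target technology.

```python
# Hypothetical contract: field name -> (expected Python type, required?).
customer_schema = {
    "id": (int, True),
    "email": (str, True),
    "nickname": (str, False),
}


def violations(record: dict, schema: dict) -> list[str]:
    """Return the ways a record breaks the contract; empty means it conforms."""
    problems = []
    for field, (expected_type, required) in schema.items():
        if field not in record:
            if required:
                problems.append(f"missing required field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"wrong type for field: {field}")
    return problems


good = violations({"id": 1, "email": "ada@example.com"}, customer_schema)
bad = violations({"id": "1"}, customer_schema)
print(good)
print(bad)
```

A producer and a consumer that both check against the same schema agree on structure without having to inspect each other's code.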

The Polyglot Data Model concept was built so that you can create a library of canonical objects for your domains and use them consistently across physical data models to produce schema contracts for different target technologies. You can then synchronize the metadata across Business and IT to foster a shared understanding of the meaning and context of data. This is best done with Metadata-as-Code.

To learn more, make sure to visit our online documentation